Toxicological and Environmental Chemistry

Volume 87, Number 4 / 26 December 2005

Pages: 463-479

Polynuclear aromatic compounds in kerosene, diesel and unmodified sunflower oil and in respective engine exhaust particulate emissions

Joseph O. Lalah (A1) and Peter N. Kaigwara (A2)

A1 Department of Chemistry, Maseno University, Maseno, Kenya

A2 Biomass Energy Section, Ministry of Energy, Nairobi, Kenya

Abstract:

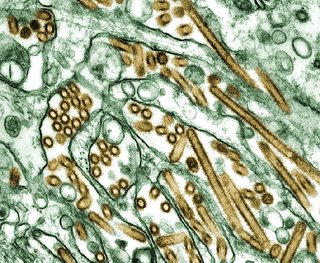

Polynuclear aromatic compounds (PAC) were characterized in diesel fuel, kerosene fuel and unmodified sunflower oil as well as in their respective engine exhaust particulates. Diesel fuel was found to contain high amounts of different PAC, up to a total concentration of 14,740 ppm, including carbazole and dibenzothiophene, which are known carcinogens. Kerosene fuel was also found to contain high amounts of different PAC, up to a total concentration of 10,930 ppm, consisting mainly of lower molecular weight (MW) naphthalene and its alkyl derivatives, but no PAC component peaks were detected in the unmodified sunflower oil. Engine exhaust particulates sampled from a modified one-cylinder diesel engine running on diesel, kerosene and unmodified sunflower oil, respectively, were found to contain significantly high concentrations of different PAC, including many of the carcinogenic ones, in the soluble organic fraction (SOF). PAC concentrations detected at the exhaust outlet indicated that most of the PAC that were present in diesel and kerosene fuels before the test runs got completely burnt out during combustion in the engine whereas some new ones were also formed. The difference between the character and composition of PAC present in the fuels and those emitted in the exhaust particulates indicated that exhaust PAC were predominantly combustion generated. High amounts of PAC, up to totals of 52,900 and 4830 µg m−3 of burnt fuel, in diesel and kerosene exhaust particulates, respectively, were detected in the dilution tunnel when the exhaust emissions were mixed with atmospheric air. Significant amounts of PAC were also emitted when the engine was run on unmodified sunflower oil with a total concentration of 17,070 µg m−3 of burnt fuel detected in the dilution tunnel. 
High proportions of the combustion-generated PAC determined when the engine was run on diesel, kerosene and unmodified sunflower oil, respectively, consisted of nitrogen-containing PAC (PANH) and sulphur-containing PAC (PASH).

>>>>>>>>>>>>>>>>>>>>>>

BrooklynDodger(s) Comments:

[Reader note: Although the Dodger has anonymized the Dodger's gender, reader(s) may wonder about the Dodger's individuality. Here the Dodger confesses to not revealing the number of bloggers who make up the Dodger. Certainly the diversity of subjects suggests more than one person, or, in the alternative, an adult ADD victim with too much time on the victim's hands. However, the Dodger(s) don't have enough time to put the (s) at the end of each usage.]

This paper comes from Kenyan colleagues. The problem in figuring out the least dangerous combination of internal combustion engine and fuel is the need for a common experimental protocol for emissions. The Dodger yearns for a data set comparing natural gas v. gasoline v. diesel from engines with potential mass-production control systems.

Anyway, per the abstract this is a one-cylinder diesel engine, so the results need extrapolation to light- and heavy-duty vehicle diesels.

Diesel and kerosene are of similar MW but are made with different refining goals and methods. The US is transitioning from high- to low-sulfur diesel; the Dodger would expect the Kenyan diesel to be high in sulfur. The Dodger has at times wondered about the chemical form of the sulfur in diesel, and here's an actual compound identified (dibenzothiophene). The Dodger has to look up carbazole.

In this contest, kerosene won, diesel fuel lost, and edible oil came out in between. The PACs came from combustion. The nitrogen for the PACs comes from thin air, and nothing can be done about that. The sulfur in the diesel might be taken out by refining changes. Where is the sulfur coming from for the edible oil? Edible oil would have less heat content, being already partially oxidized. How they combust edible oil, with its much lower vapor pressure than the hydrocarbon fuels, mystifies the Dodger.
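The win/lose/in-between verdict rests on the dilution-tunnel totals quoted in the abstract. A minimal Python sketch makes the arithmetic explicit, assuming those three totals (µg per m³ of burnt fuel, from a single-cylinder engine) are directly comparable across fuels. The names `totals`, `ranking` and `ratios` are illustrative only, and the "times kerosene" multiples are the Dodger-style arithmetic on the quoted figures, not numbers reported by the authors.

```python
# Total PAC in dilution-tunnel exhaust particulates, in µg per m^3 of burnt fuel,
# as quoted in the abstract. Assumes the three totals are directly comparable.
totals = {
    "diesel": 52_900,
    "unmodified sunflower oil": 17_070,
    "kerosene": 4_830,
}

# Rank fuels from lowest to highest PAC emission (lowest "wins").
ranking = sorted(totals, key=totals.get)

# Express each fuel's total as a multiple of the lowest emitter, to one decimal.
best = totals[ranking[0]]
ratios = {fuel: round(totals[fuel] / best, 1) for fuel in ranking}

if __name__ == "__main__":
    for fuel in ranking:
        print(f"{fuel:>26}: {totals[fuel]:>6} µg/m^3 ({ratios[fuel]}x {ranking[0]})")
```

On these figures diesel exhaust carries roughly 11 times, and sunflower oil exhaust roughly 3.5 times, the PAC total of kerosene exhaust.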

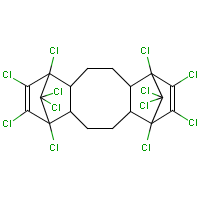

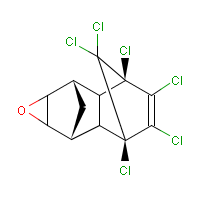

[Blogger refused to upload BMPs of carbazole or dibenzothiophene, or to paste the image. Picture dibenzofuran with a sulfur in place of the oxygen [for dibenzothiophene] or a nitrogen (NH) [for carbazole]. These are 6-5-6 ring heterocycles, in contrast to dibenzodioxins, which are 6-6-6 heterocycles with two oxygens.]

John Wilkes was recently the subject of a biography casting him as the father of civil liberties for his struggles before the American War of Independence. BrooklynDodger(s) haven't yet gotten to the end of this newly published book. One of the BrooklynDodger(s) personalities now considers Wilkes an avatar.
